Firefighting robot competition

I was part of a five-person team competing in a robot competition held at my university. The competition was similar to the well-known robot firefighting contest held at Trinity College. The goal was to build an autonomous robot that could navigate a simple model of a building consisting of four rooms. One of the rooms contained a lit candle representing a raging fire, and the robot's task was to find the candle on its own and put it out.

Officially we did not manage to put out the candle, but we still did well and took second place in the competition. As you can see in the video below, the robot did put out the candle, with a little help. 😉

Let's get into some more technical details!

The mechanics

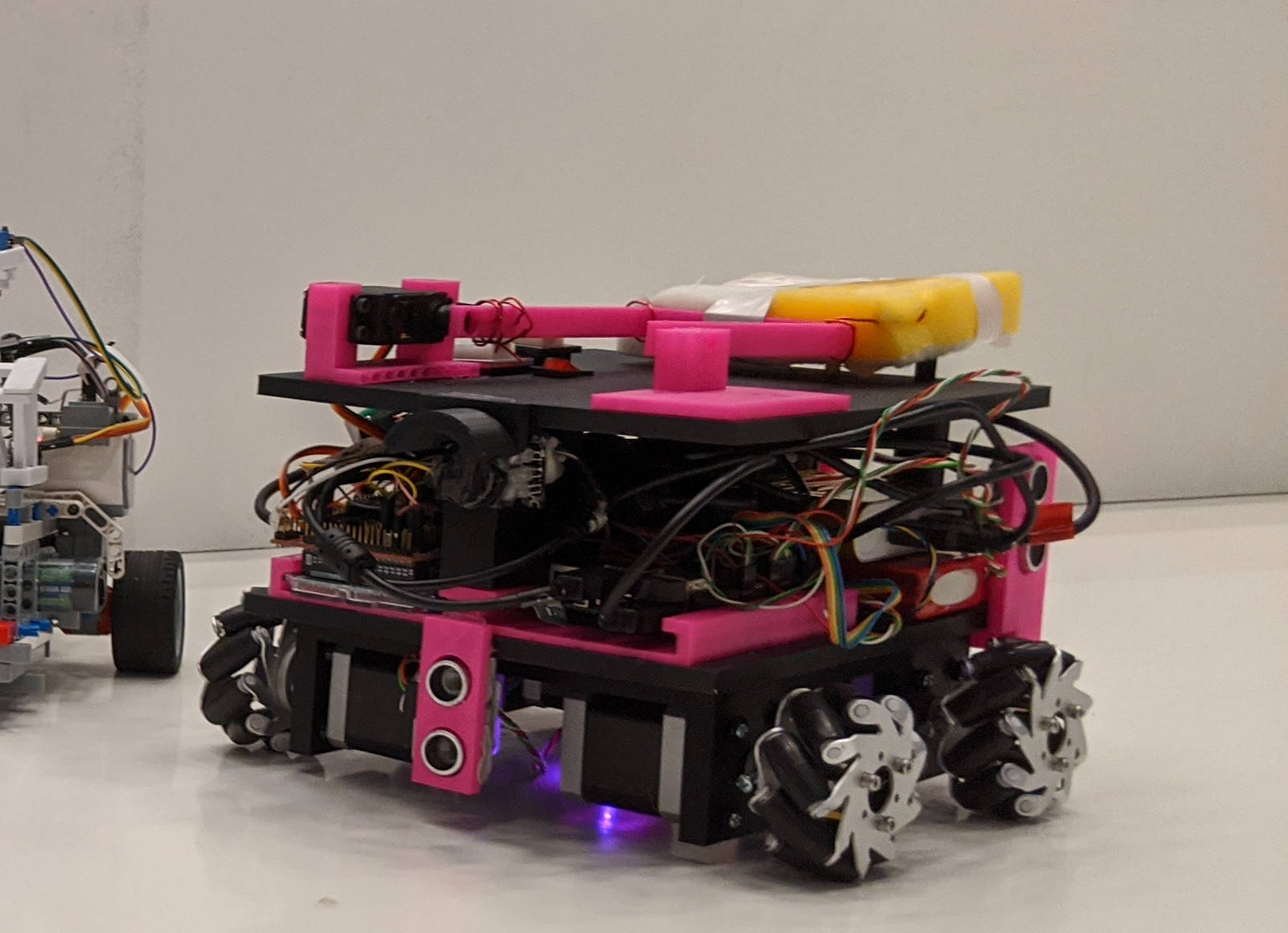

Another team member designed and 3D-printed the body of the robot. The robot used mecanum wheels, which allow it to move in any direction and rotate on the spot. Each wheel was driven by its own stepper motor, and a small servo controlled the arm holding the sponge.
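To make "move in any direction" concrete, here is a minimal sketch of the standard inverse kinematics for a four-wheel mecanum base, mapping a desired body velocity to wheel angular velocities. The dimensions in the hypothetical `MecanumGeometry` class are made up, and the signs assume the common "X" roller arrangement seen from above; the robot's actual geometry and wheel conventions are not stated in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MecanumGeometry:
    # Hypothetical dimensions in metres, not the robot's real measurements.
    wheel_radius: float = 0.03
    half_length: float = 0.08  # centre to front/rear axle
    half_width: float = 0.07   # centre to left/right wheel


def wheel_speeds(vx: float, vy: float, omega: float,
                 geom: MecanumGeometry = MecanumGeometry()):
    """Map a body velocity to wheel angular velocities in rad/s.

    vx: forward speed (m/s), vy: leftward speed (m/s),
    omega: counter-clockwise rotation rate (rad/s).
    Returns (front_left, front_right, rear_left, rear_right),
    assuming the common "X" roller layout.
    """
    k = geom.half_length + geom.half_width
    r = geom.wheel_radius
    return (
        (vx - vy - k * omega) / r,  # front-left
        (vx + vy + k * omega) / r,  # front-right
        (vx + vy - k * omega) / r,  # rear-left
        (vx - vy + k * omega) / r,  # rear-right
    )
```

Strafing left, for example, spins the front-left and rear-right wheels backwards and the other two forwards. Each wheel speed would still have to be converted to a step rate (steps per revolution divided by 2π) before being sent to a stepper driver.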

The electronics

The electronics consisted of a Raspberry Pi, an Arduino fitted with a 3D-printer controller board to drive the stepper motors, four ultrasonic distance sensors to measure the distances to the walls, and a camera with its IR filter removed to detect the burning candle.
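Ultrasonic rangefinders like these typically measure the round-trip time of an echo rather than the distance itself. A minimal conversion sketch, assuming the sensor reports the echo time in microseconds (as many hobby modules do through the width of an echo pulse) and a speed of sound of 343 m/s, roughly that of dry air at 20 °C; the exact sensor model is not stated in the post.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 °C


def echo_to_distance_m(echo_us: float,
                       speed_of_sound: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Convert a round-trip echo time in microseconds to a one-way distance in metres."""
    round_trip_s = echo_us * 1e-6
    # Halve it: the pulse travels to the wall and back.
    return speed_of_sound * round_trip_s / 2.0
```

For example, an echo time of 1000 µs corresponds to a wall about 0.17 m away.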

Everything was powered by a combination of USB power banks and Li-Po batteries.

The software

For the Arduino, I wrote a custom program that controlled multiple stepper motors in parallel. I also applied control theory to ensure smooth acceleration at all times, which avoided wheel slippage and thus improved the dead-reckoning estimate of the robot's position. To achieve this, I placed a digital low-pass filter in front of the stepper velocity signal and then designed an LQ controller around it, so that the absolute position of the steppers could also be controlled smoothly. The Raspberry Pi could then command the Arduino over a serial connection to move the robot either at a constant velocity or to a specific absolute position.
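The filter-plus-LQ idea can be sketched in a few lines of Python. This is a simulation under assumptions, not the Arduino code: it assumes a first-order low-pass filter with time constant `tau` between the commanded and the actual step rate, a fixed control period `dt`, and hand-picked weights `Q` and `R`, none of which are given in the post. Folding the filter into the plant model lets the LQ gain account for it, so a position move never demands a jump in velocity. The gain is computed offline by iterating the discrete Riccati equation; `scipy.linalg.solve_discrete_are` would be an alternative.

```python
import numpy as np


def filtered_stepper_model(dt: float, tau: float):
    """Discrete model with state x = [position error, velocity].

    The commanded velocity u passes through a first-order low-pass filter,
    v[k+1] = a*v[k] + (1 - a)*u[k], so the actual velocity never jumps;
    the position integrates the filtered velocity.
    """
    a = np.exp(-dt / tau)  # pole of the low-pass filter
    A = np.array([[1.0, dt],
                  [0.0, a]])
    B = np.array([[0.0],
                  [1.0 - a]])
    return A, B


def dlqr(A, B, Q, R, max_iter=100_000):
    """LQ state-feedback gain K (u = -K x) from the discrete Riccati equation."""
    P = Q.copy()
    for _ in range(max_iter):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P_next = Q + A.T @ P @ A - A.T @ P @ B @ K
        converged = np.allclose(P_next, P, rtol=1e-10, atol=1e-12)
        P = P_next
        if converged:
            break
    return np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)


def move_to(target: float, steps: int = 400, dt: float = 0.01, tau: float = 0.1):
    """Simulate a move from rest at position 0 to `target`.

    Returns the final (position, velocity).
    """
    A, B = filtered_stepper_model(dt, tau)
    Q = np.diag([100.0, 1.0])  # hypothetical weights: position error, velocity
    R = np.array([[1.0]])      # hypothetical weight on the velocity command
    K = dlqr(A, B, Q, R)
    x = np.array([-target, 0.0])  # position error = position - target
    for _ in range(steps):
        u = -(K @ x).item()  # velocity command fed into the low-pass filter
        x = A @ x + B[:, 0] * u
    return x[0] + target, x[1]
```

`move_to(1.0)` should end at rest at position 1.0. In constant-velocity mode one would instead feed the requested velocity straight into the same filter.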

On the Raspberry Pi, we used a rather involved software stack built on Linux, Docker and ROS2. Separate ROS2 nodes controlled and interfaced with the hardware and the Arduino. To explore the building, we modeled it as a maze. As the robot traversed the maze, it built up an internal graph of it and explored that graph using depth-first search. Cycles in the graph were detected using the robot's estimate of its absolute position, obtained by dead reckoning from the stepper motors: when the robot came close to a node it had already visited, it recognized that it had closed a cycle. This worked quite well in practice!
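A minimal sketch of this exploration logic, abstracted away from the real robot. The hypothetical callback `openings(p)` stands in for driving out of the junction at dead-reckoned position `p` along each open corridor and reporting where the next junction appears to be. A position within `merge_radius` of a known junction is treated as that junction, which is the cycle detection described above; the radius value is an assumption and in practice would have to exceed the accumulated dead-reckoning drift.

```python
import math
from typing import Callable, Dict, List, Set, Tuple

Point = Tuple[float, float]


class MazeGraph:
    """Junctions discovered so far, stored with their dead-reckoned positions."""

    def __init__(self, merge_radius: float) -> None:
        self.merge_radius = merge_radius
        self.positions: List[Point] = []
        self.edges: Dict[int, Set[int]] = {}

    def node_at(self, p: Point) -> Tuple[int, bool]:
        """Return (node id, is_new). Landing within merge_radius of a known
        node means the robot has come back to it, i.e. it closed a cycle."""
        for i, q in enumerate(self.positions):
            if math.dist(p, q) < self.merge_radius:
                return i, False
        self.positions.append(p)
        new_id = len(self.positions) - 1
        self.edges[new_id] = set()
        return new_id, True

    def connect(self, a: int, b: int) -> None:
        self.edges[a].add(b)
        self.edges[b].add(a)


def explore(start: Point,
            openings: Callable[[Point], List[Point]],
            merge_radius: float = 0.15) -> MazeGraph:
    """Depth-first exploration of the maze, building the graph as it goes."""
    graph = MazeGraph(merge_radius)

    def visit(node: int) -> None:
        for p in openings(graph.positions[node]):
            nxt, is_new = graph.node_at(p)
            graph.connect(node, nxt)
            if is_new:
                # Go deeper first, then backtrack to try the next corridor.
                visit(nxt)
            # Otherwise a known junction was reached again (a cycle),
            # so it is not explored a second time.

    root, _ = graph.node_at(start)
    visit(root)
    return graph
```

On the real robot each call to `visit` would correspond to physically driving to a junction, and returning from it to driving back through the corridor it came from.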

There were bonus points for starting the robot when it detected a 1 kHz sine wave. Using detection theory, I derived an optimal detector for a pure sine wave with unknown amplitude and phase offset. The detector was tuned by choosing the signal-to-noise ratio assumed in it, and it was implemented as a ROS2 node connected to a microphone.
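One standard way to obtain such a detector, which may differ from the exact derivation used on the robot, is to model the in-phase and quadrature amplitudes of the 1 kHz tone as independent zero-mean Gaussians with variance σ_s² (equivalent to a Rayleigh-distributed amplitude and a uniformly distributed phase) in white Gaussian noise with variance σ². The likelihood-ratio test then depends on the samples only through the energy at 1 kHz, z = (c² + s²)/σ², where c and s are the correlations with a cosine and a sine. With N samples spanning whole periods of the tone, ln Λ = z·snr/(2g) - ln g, where snr = σ_s²/σ² and g = 1 + snr·N/2. The sample rate, frame length, noise variance and default SNR below are assumptions.

```python
import math
from typing import Sequence


def quadrature_energy(x: Sequence[float], f0: float, fs: float) -> float:
    """Squared correlations of x with a cosine and a sine at frequency f0."""
    c = sum(v * math.cos(2 * math.pi * f0 * n / fs) for n, v in enumerate(x))
    s = sum(v * math.sin(2 * math.pi * f0 * n / fs) for n, v in enumerate(x))
    return c * c + s * s


def detect_tone(x: Sequence[float], f0: float = 1000.0, fs: float = 16000.0,
                noise_var: float = 1.0, snr: float = 0.01,
                log_eta: float = 0.0) -> bool:
    """Likelihood-ratio test for a tone with Gaussian in-phase/quadrature amplitudes.

    snr is the assumed signal variance per component divided by noise_var;
    log_eta is the log threshold (0 gives minimum error for equal priors).
    Assumes the frame spans a whole number of periods of f0.
    """
    g = 1.0 + snr * len(x) / 2.0
    z = quadrature_energy(x, f0, fs) / noise_var
    log_lr = z * snr / (2.0 * g) - math.log(g)
    return log_lr > log_eta
```

In this model, raising the assumed SNR raises the threshold on z, trading missed detections for fewer false alarms. A real node would also need an estimate of the noise variance, for example from frames recorded in silence.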

To detect the candle, a ROS2 node used OpenCV to find round, bright objects in the camera image.
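A rough sketch of a bright-and-round test, written with plain NumPy rather than OpenCV so it has no extra dependency (in OpenCV the same steps would typically use `cv2.threshold`, `cv2.findContours` and `cv2.minEnclosingCircle`). It assumes an 8-bit grayscale frame in which the flame, seen by a camera without an IR filter, saturates to near white; the threshold, minimum area and roundness limit are made-up values. Roundness here is the blob's area divided by the area of a circle around its centroid that encloses it, which is close to 1.0 for a disc and small for elongated reflections.

```python
import math
from collections import deque

import numpy as np


def find_bright_round_blobs(gray: np.ndarray, threshold: int = 220,
                            min_area: int = 20, min_roundness: float = 0.6):
    """Return (row, col, radius) for each bright, roughly circular blob."""
    mask = gray >= threshold
    seen = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    found = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        # Flood-fill one 4-connected bright component.
        queue = deque([(r0, c0)])
        seen[r0, c0] = True
        pixels = []
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w and mask[rr, cc] and not seen[rr, cc]:
                    seen[rr, cc] = True
                    queue.append((rr, cc))
        if len(pixels) < min_area:
            continue  # too small: likely noise
        pts = np.array(pixels, dtype=float)
        centre = pts.mean(axis=0)
        # Radius of a centroid-centred circle covering every pixel of the blob.
        radius = np.sqrt(((pts - centre) ** 2).sum(axis=1)).max() + 0.5
        roundness = len(pixels) / (math.pi * radius ** 2)
        if roundness >= min_roundness:
            found.append((centre[0], centre[1], radius))
    return found
```

The pure-Python flood fill is slow on full-resolution frames, so a real node would likely downscale the image first or use OpenCV's contour functions instead.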